🙋Can LLMs generate accurate SQL queries?

Several LLM based articles down the line, I wanted to touch upon an important aspect of Large Language Models that people debate about all the time — Can an LLM take over the work of a Data Engineering team?

Through the course of this article, I will attempt to answer this question.

But before we dive in, let me state one thing with absolute certainty — Exposing your data to Large Language models is a double edged sword!

We are already aware of the fact that content generation by LLMs are usually non deterministic; As in similar prompts to the LLM might give varied results. Does this hold true for structured language — such as SQL queries?

Well, the Chase SQL Paper tried to answer this question. Here’s a link to the white paper (It’s worth your time to read it!)

CHASE-SQL Multi-Path Reasoning and Preference Optimized Candidate Selection in Text-to-SQL

Taking inspiration from the white paper, I tried to establish my own LLM based Database Administrator.

Evaluating LLMs on SQL Generation

Following from the Chase SQL paper, I am running an evaluation on the Spider Data set of SQLite databases.

Specifically, I am actually evaluating against a single database — Department Management and only running 10 queries from the test bed to evaluate.

I am doing the evaluation for Open AI and Anthropic because these are the 2 giants with prolific usage across organizations.

Before we go under the hood though, in terms of query accuracy, the results of the queries are — 60% Match on query results(Actual vs LLM generated query results).

And in terms of SQL queries generated, the SQL query syntax match percentage is approximately 75%.

But I must also state that I haven’t exactly followed the directions from the Chase SQL paper because most enterprises don’t anyway when they point an LLM at their databases.

I will talk about techniques to improve SQL query accuracy but before we get to that, let’s dive into the code.

The implementation

The first order of business is to download the Spider Dataset which I downloaded from Kaggle with this link —

Having got the databases into a local directory within the project, I now wanted to load a single database and pick 10 query samples from it. Here is the code to do so —

def test_on_spider(spider_path, db_path, spider_test_data):

try:

# Load Spider dataset

spider_data = load_spider_dataset(spider_path)

db_id = spider_data[0]['db_id']

db_path = db_path + db_id + '/' + db_id + ".sqlite"

# Get actual schema for this specific database

actual_schema = get_actual_schema(db_path)

spider_test_data = load_spider_test_dataset(spider_test_data)

# Filter for department_management database

dept_management_data = [item for item in spider_test_data if item['db_id'] == 'department_management']

# Extract questions and actual queries

test_questions = [item['question'] for item in dept_management_data]

test_actual_queries = [item['query'] for item in dept_management_data]

conn = sqlite3.connect(db_path)

cursor = conn.cursor()

results = []

for i, (question, actual_query) in enumerate(zip(test_questions, test_actual_queries)):

if i > 9: # Limit to 10 questions for testing

break

print(f"\nQuestion {i+1}: {question}")

print(f"Actual SQL query: {actual_query}")

# Generate query using Chase SQL

generated_query = divide_and_conquer(question, actual_schema)

print(f"Generated SQL query: {generated_query}")

# Calculate query similarity

similarity = query_similarity(generated_query, actual_query)

print(f"Query similarity: {similarity:.2%}")

# Execute both queries and compare results

try:

cursor.execute(generated_query)

generated_result = cursor.fetchall()

cursor.execute(actual_query)

actual_result = cursor.fetchall()

# Compare results

results_match = (generated_result == actual_result)

results.append({

'question': question,

'generated_query': generated_query,

'actual_query': actual_query,

'results_match': results_match,

'query_similarity': similarity,

'generated_result': generated_result,

'actual_result': actual_result

})

print(f"Results match: {results_match}")

print(f"Generated result: {generated_result}")

print(f"Actual result: {actual_result}")

except sqlite3.Error as e:

print(f"Error executing SQL: {e}")

results.append({

'question': question,

'generated_query': generated_query,

'actual_query': actual_query,

'results_match': False,

'query_similarity': similarity,

'error': str(e)

})

# Compute overall statistics

total_queries = len(results)

correct_queries = sum(1 for r in results if r['results_match'])

accuracy = correct_queries / total_queries if total_queries > 0 else 0

avg_similarity = sum(r['query_similarity'] for r in results) / total_queries

print(f"\nOverall Results:")

print(f"Total Queries: {total_queries}")

print(f"Correct Queries: {correct_queries}")

print(f"Accuracy: {accuracy:.2%}")

print(f"Average Query Similarity: {avg_similarity:.2%}")

# Analyze types of errors

error_types = {}

for r in results:

if not r['results_match']:

if 'error' in r:

error_type = r['error'].split(':')[0]

else:

error_type = 'Incorrect Result'

error_types[error_type] = error_types.get(error_type, 0) + 1

print("\nError Types:")

for error_type, count in error_types.items():

print(f"{error_type}: {count} ({count/total_queries:.2%})")

# Find best and worst performing queries

best_query = max(results, key=lambda r: r['query_similarity'])

worst_query = min(results, key=lambda r: r['query_similarity'])

print("\nBest Performing Query:")

print(f"Question: {best_query['question']}")

print(f"Generated Query: {best_query['generated_query']}")

print(f"Actual Query: {best_query['actual_query']}")

print(f"Similarity: {best_query['query_similarity']:.2%}")

print("\nWorst Performing Query:")

print(f"Question: {worst_query['question']}")

print(f"Generated Query: {worst_query['generated_query']}")

print(f"Actual Query: {worst_query['actual_query']}")

print(f"Similarity: {worst_query['query_similarity']:.2%}")

except Exception as e:

print(f"An error occurred in test_on_spider: {e}")

import traceback

traceback.print_exc()

finally:

if conn:

conn.close()

return resultsThis function does a lot. Let’s go step by step.

1 — Load the Department Management Dataset

Load this specific database. There are several SQLite databases downloaded with the Spider Dataset but I just picked one for evaluation.

We first call the Get Actual Schema function to extract the Database schema —

def get_actual_schema(db_path):

conn = sqlite3.connect(db_path)

cursor = conn.cursor()

cursor.execute("SELECT name FROM sqlite_master WHERE type='table';")

tables = [row[0] for row in cursor.fetchall()]

schema = {'table_names': tables, 'column_names': [], 'column_types': []}

for table in tables:

cursor.execute(f"PRAGMA table_info({table})")

for col in cursor.fetchall():

schema['column_names'].append([tables.index(table), col[1]])

schema['column_types'].append(col[2])

conn.close()

return schemaWe extract it from the actual database which we have already created.

2 — Load the actual dataset

Load the actual spider dataset stored in the dataset directory in json format —

def load_spider_test_dataset(spider_test_data):

with open(spider_test_data) as f:

return json.load(f)This is the sample from the json —

[

{

"db_id": "department_management",

"query": "SELECT count(*) FROM head WHERE age > 56",

"query_toks": [

"SELECT",

"count",

"(",

"*",

")",

"FROM",

"head",

"WHERE",

"age",

">",

"56"

],

"query_toks_no_value": [

"select",

"count",

"(",

"*",

")",

"from",

"head",

"where",

"age",

">",

"value"

],

"question": "How many heads of the departments are older than 56 ?",

"question_toks": [

"How",

"many",

"heads",

"of",

"the",

"departments",

"are",

"older",

"than",

"56",

"?"

],As you can see it has actual queries and results to evaluate the expected result.

# Filter for department_management database

dept_management_data = [item for item in spider_test_data if item['db_id'] == 'department_management']

# Extract questions and actual queries

test_questions = [item['question'] for item in dept_management_data]

test_actual_queries = [item['query'] for item in dept_management_data]These lines get the test natural questions and actual queries.

3 — Run the query using the LLM

Using the natural language question, run a query against the test database —

generated_query = divide_and_conquer(question, actual_schema)This function provides the schema to the LLM and asks it to generate an SQL query. This is the function definition —

def divide_and_conquer(question, schema):

# Create a structured representation of the schema

schema_info = "Database Schema:\n"

for table_id, table in enumerate(schema['table_names']):

schema_info += f"Table: {table}\n"

columns = [column[1] for column in schema['column_names'] if column[0] == table_id]

for col in columns:

col_index = columns.index(col)

col_type = schema['column_types'][col_index]

schema_info += f" - {col} ({col_type})"

schema_info += "\n"

schema_info += "\n"

# Generate the SQL query

prompt = f"""

{schema_info}

Given the above database schema, generate a SQL query pertaining to the provided schema to answer the following question:

{question}

IMPORTANT:

1. DO NOT MAKE UP COLUMN NAMES. USE ONLY THE PROVIDED TABLE NAMES AND ASSOCIATED COUMNS.

2. Ensure the query is syntactically correct and complete for SQLite.

3. Include joins where necessary based on the foreign key relationships.

4. Use SQLite date functions for any date calculations (e.g., strftime, date, julianday).

5. Generate the query only, don't add any additional text or formatting.

6. Don't use strftime to modify date fields in the query, select dates as is.

"""

sql_query = llm_generate(prompt)

sql_query = sql_query.replace("```sql", "")

sql_query = sql_query.replace("```", "")

return sql_queryThe generated SQL query is returned to the test_on_spider function.

4 — Compare queries and results

The next few lines run the actual query from the test json and the LLM generated query against the database, then compares the results —

# Calculate query similarity

similarity = query_similarity(generated_query, actual_query)

print(f"Query similarity: {similarity:.2%}")

# Execute both queries and compare results

try:

cursor.execute(generated_query)

generated_result = cursor.fetchall()

cursor.execute(actual_query)

actual_result = cursor.fetchall()

# Compare results

results_match = (generated_result == actual_result)

results.append({

'question': question,

'generated_query': generated_query,

'actual_query': actual_query,

'results_match': results_match,

'query_similarity': similarity,

'generated_result': generated_result,

'actual_result': actual_result

})

print(f"Results match: {results_match}")

print(f"Generated result: {generated_result}")

print(f"Actual result: {actual_result}")And finally, we aggregate the results —

# Compute overall statistics

total_queries = len(results)

correct_queries = sum(1 for r in results if r['results_match'])

accuracy = correct_queries / total_queries if total_queries > 0 else 0

avg_similarity = sum(r['query_similarity'] for r in results) / total_queries

print(f"\nOverall Results:")

print(f"Total Queries: {total_queries}")

print(f"Correct Queries: {correct_queries}")

print(f"Accuracy: {accuracy:.2%}")

print(f"Average Query Similarity: {avg_similarity:.2%}")

# Analyze types of errors

error_types = {}

for r in results:

if not r['results_match']:

if 'error' in r:

error_type = r['error'].split(':')[0]

else:

error_type = 'Incorrect Result'

error_types[error_type] = error_types.get(error_type, 0) + 1

print("\nError Types:")

for error_type, count in error_types.items():

print(f"{error_type}: {count} ({count/total_queries:.2%})")

# Find best and worst performing queries

best_query = max(results, key=lambda r: r['query_similarity'])

worst_query = min(results, key=lambda r: r['query_similarity'])

print("\nBest Performing Query:")

print(f"Question: {best_query['question']}")

print(f"Generated Query: {best_query['generated_query']}")

print(f"Actual Query: {best_query['actual_query']}")

print(f"Similarity: {best_query['query_similarity']:.2%}")

print("\nWorst Performing Query:")

print(f"Question: {worst_query['question']}")

print(f"Generated Query: {worst_query['generated_query']}")

print(f"Actual Query: {worst_query['actual_query']}")

print(f"Similarity: {worst_query['query_similarity']:.2%}")This is the overall result with Anthropic (claude-3-sonnet) —

Question 1: How many heads of the departments are older than 56 ?

Actual SQL query: SELECT count(*) FROM head WHERE age > 56

Generated SQL query: SELECT COUNT(*)

FROM head h

JOIN management m ON h.head_ID = m.head_ID

WHERE h.age > 56;

Query similarity: 62.50%

Results match: False

Generated result: [(3,)]

Actual result: [(5,)]

Question 2: List the name, born state and age of the heads of departments ordered by age.

Actual SQL query: SELECT name , born_state , age FROM head ORDER BY age

Generated SQL query: SELECT h.name, h.born_state, h.age

FROM head h

JOIN management m ON h.head_ID = m.head_ID

ORDER BY h.age;

Query similarity: 64.56%

Results match: False

Generated result: [('Dudley Hart', 'California', 52.0), ('Jeff Maggert', 'Delaware', 53.0), ('Franklin Langham', 'Connecticut', 67.0), ('Billy Mayfair', 'California', 69.0), ('K. J. Choi', 'Alabama', 69.0)]

Actual result: [('Pádraig Harrington', 'Connecticut', 43.0), ('Stewart Cink', 'Florida', 50.0), ('Dudley Hart', 'California', 52.0), ('Jeff Maggert', 'Delaware', 53.0), ('Nick Faldo', 'California', 56.0), ('Tiger Woods', 'Alabama', 67.0), ('Franklin Langham', 'Connecticut', 67.0), ('Sergio García', 'California', 68.0), ('K. J. Choi', 'Alabama', 69.0), ('Billy Mayfair', 'California', 69.0)]

Question 3: List the creation year, name and budget of each department.

Actual SQL query: SELECT creation , name , budget_in_billions FROM department

Generated SQL query: SELECT Creation, Name, Budget_in_Billions

FROM department;

Query similarity: 97.44%

Results match: True

Generated result: [('1789', 'State', 9.96), ('1789', 'Treasury', 11.1), ('1947', 'Defense', 439.3), ('1870', 'Justice', 23.4), ('1849', 'Interior', 10.7), ('1889', 'Agriculture', 77.6), ('1903', 'Commerce', 6.2), ('1913', 'Labor', 59.7), ('1953', 'Health and Human Services', 543.2), ('1965', 'Housing and Urban Development', 46.2), ('1966', 'Transportation', 58.0), ('1977', 'Energy', 21.5), ('1979', 'Education', 62.8), ('1989', 'Veterans Affairs', 73.2), ('2002', 'Homeland Security', 44.6)]

Actual result: [('1789', 'State', 9.96), ('1789', 'Treasury', 11.1), ('1947', 'Defense', 439.3), ('1870', 'Justice', 23.4), ('1849', 'Interior', 10.7), ('1889', 'Agriculture', 77.6), ('1903', 'Commerce', 6.2), ('1913', 'Labor', 59.7), ('1953', 'Health and Human Services', 543.2), ('1965', 'Housing and Urban Development', 46.2), ('1966', 'Transportation', 58.0), ('1977', 'Energy', 21.5), ('1979', 'Education', 62.8), ('1989', 'Veterans Affairs', 73.2), ('2002', 'Homeland Security', 44.6)]

Question 4: What are the maximum and minimum budget of the departments?

Actual SQL query: SELECT max(budget_in_billions) , min(budget_in_billions) FROM department

Generated SQL query: SELECT MAX(Budget_in_Billions) AS max_budget, MIN(Budget_in_Billions) AS min_budget

FROM department;

Query similarity: 83.72%

Results match: True

Generated result: [(543.2, 6.2)]

Actual result: [(543.2, 6.2)]

Question 5: What is the average number of employees of the departments whose rank is between 10 and 15?

Actual SQL query: SELECT avg(num_employees) FROM department WHERE ranking BETWEEN 10 AND 15

Generated SQL query: SELECT AVG(Num_Employees) AS avg_num_employees

FROM department

WHERE Ranking BETWEEN 10 AND 15;

Query similarity: 86.90%

Results match: True

Generated result: [(105468.16666666667,)]

Actual result: [(105468.16666666667,)]

Question 6: What are the names of the heads who are born outside the California state?

Actual SQL query: SELECT name FROM head WHERE born_state != 'California'

Generated SQL query: SELECT h.name

FROM head h

JOIN management m ON h.head_ID = m.head_ID

WHERE h.born_state <> 'California';

Query similarity: 65.82%

Results match: False

Generated result: [('Jeff Maggert',), ('K. J. Choi',), ('Franklin Langham',)]

Actual result: [('Tiger Woods',), ('K. J. Choi',), ('Jeff Maggert',), ('Stewart Cink',), ('Pádraig Harrington',), ('Franklin Langham',)]

Question 7: What are the distinct creation years of the departments managed by a secretary born in state 'Alabama'?

Actual SQL query: SELECT DISTINCT T1.creation FROM department AS T1 JOIN management AS T2 ON T1.department_id = T2.department_id JOIN head AS T3 ON T2.head_id = T3.head_id WHERE T3.born_state = 'Alabama'

Generated SQL query: SELECT DISTINCT strftime('%Y', Creation) AS creation_year

FROM department d

JOIN management m ON d.Department_ID = m.department_ID

JOIN head h ON m.head_ID = h.head_ID

WHERE h.born_state = 'Alabama';

Query similarity: 82.29%

Results match: False

Generated result: [('-4707',)]

Actual result: [('1903',)]

Question 8: What are the names of the states where at least 3 heads were born?

Actual SQL query: SELECT born_state FROM head GROUP BY born_state HAVING count(*) >= 3

Generated SQL query: SELECT born_state, COUNT(*) AS count

FROM head

GROUP BY born_state

HAVING COUNT(*) >= 3;

Query similarity: 87.18%

Results match: False

Generated result: [('California', 4)]

Actual result: [('California',)]

Question 9: In which year were most departments established?

Actual SQL query: SELECT creation FROM department GROUP BY creation ORDER BY count(*) DESC LIMIT 1

Generated SQL query: SELECT strftime('%Y', Creation) AS Year, COUNT(*) AS Num_Departments

FROM department

GROUP BY Year

ORDER BY Num_Departments DESC

LIMIT 1;

Query similarity: 63.59%

Results match: False

Generated result: [('-4707', 12)]

Actual result: [('1789',)]

Question 10: Show the name and number of employees for the departments managed by heads whose temporary acting value is 'Yes'?

Actual SQL query: SELECT T1.name , T1.num_employees FROM department AS T1 JOIN management AS T2 ON T1.department_id = T2.department_id WHERE T2.temporary_acting = 'Yes'

Generated SQL query: SELECT d.Name, d.Num_Employees

FROM department d

JOIN management m ON d.Department_ID = m.department_ID

JOIN head h ON m.head_ID = h.head_ID

WHERE m.temporary_acting = 'Yes';

Query similarity: 79.63%

Results match: True

Generated result: [('Treasury', 115897.0), ('Homeland Security', 208000.0), ('Treasury', 115897.0)]

Actual result: [('Treasury', 115897.0), ('Homeland Security', 208000.0), ('Treasury', 115897.0)]

Overall Results:

Total Queries: 10

Correct Queries: 4

Accuracy: 40.00%

Average Query Similarity: 77.36%

Error Types:

Incorrect Result: 6 (60.00%)

Best Performing Query:

Question: List the creation year, name and budget of each department.

Generated Query: SELECT Creation, Name, Budget_in_Billions

FROM department;

Actual Query: SELECT creation , name , budget_in_billions FROM department

Similarity: 97.44%

Worst Performing Query:

Question: How many heads of the departments are older than 56 ?

Generated Query: SELECT COUNT(*)

FROM head h

JOIN management m ON h.head_ID = m.head_ID

WHERE h.age > 56;

Actual Query: SELECT count(*) FROM head WHERE age > 56

Similarity: 62.50%These are the results with Open AI(gpt-4o-mini) —

Question 1: How many heads of the departments are older than 56 ?

Actual SQL query: SELECT count(*) FROM head WHERE age > 56

Generated SQL query: SELECT COUNT(*)

FROM head

WHERE age > 56;

Query similarity: 98.77%

Results match: True

Generated result: [(5,)]

Actual result: [(5,)]

Question 2: List the name, born state and age of the heads of departments ordered by age.

Actual SQL query: SELECT name , born_state , age FROM head ORDER BY age

Generated SQL query: SELECT head.name, head.born_state, head.age

FROM head

JOIN management ON head.head_ID = management.head_ID

JOIN department ON management.department_ID = department.Department_ID

ORDER BY head.age;

Query similarity: 40.96%

Results match: False

Generated result: [('Dudley Hart', 'California', 52.0), ('Jeff Maggert', 'Delaware', 53.0), ('Franklin Langham', 'Connecticut', 67.0), ('Billy Mayfair', 'California', 69.0), ('K. J. Choi', 'Alabama', 69.0)]

Actual result: [('Pádraig Harrington', 'Connecticut', 43.0), ('Stewart Cink', 'Florida', 50.0), ('Dudley Hart', 'California', 52.0), ('Jeff Maggert', 'Delaware', 53.0), ('Nick Faldo', 'California', 56.0), ('Tiger Woods', 'Alabama', 67.0), ('Franklin Langham', 'Connecticut', 67.0), ('Sergio García', 'California', 68.0), ('K. J. Choi', 'Alabama', 69.0), ('Billy Mayfair', 'California', 69.0)]

Question 3: List the creation year, name and budget of each department.

Actual SQL query: SELECT creation , name , budget_in_billions FROM department

Generated SQL query: SELECT Creation, Name, Budget_in_Billions FROM department;

Query similarity: 97.44%

Results match: True

Generated result: [('1789', 'State', 9.96), ('1789', 'Treasury', 11.1), ('1947', 'Defense', 439.3), ('1870', 'Justice', 23.4), ('1849', 'Interior', 10.7), ('1889', 'Agriculture', 77.6), ('1903', 'Commerce', 6.2), ('1913', 'Labor', 59.7), ('1953', 'Health and Human Services', 543.2), ('1965', 'Housing and Urban Development', 46.2), ('1966', 'Transportation', 58.0), ('1977', 'Energy', 21.5), ('1979', 'Education', 62.8), ('1989', 'Veterans Affairs', 73.2), ('2002', 'Homeland Security', 44.6)]

Actual result: [('1789', 'State', 9.96), ('1789', 'Treasury', 11.1), ('1947', 'Defense', 439.3), ('1870', 'Justice', 23.4), ('1849', 'Interior', 10.7), ('1889', 'Agriculture', 77.6), ('1903', 'Commerce', 6.2), ('1913', 'Labor', 59.7), ('1953', 'Health and Human Services', 543.2), ('1965', 'Housing and Urban Development', 46.2), ('1966', 'Transportation', 58.0), ('1977', 'Energy', 21.5), ('1979', 'Education', 62.8), ('1989', 'Veterans Affairs', 73.2), ('2002', 'Homeland Security', 44.6)]

Question 4: What are the maximum and minimum budget of the departments?

Actual SQL query: SELECT max(budget_in_billions) , min(budget_in_billions) FROM department

Generated SQL query: SELECT MAX(Budget_in_Billions) AS Max_Budget, MIN(Budget_in_Billions) AS Min_Budget FROM department;

Query similarity: 83.72%

Results match: True

Generated result: [(543.2, 6.2)]

Actual result: [(543.2, 6.2)]

Question 5: What is the average number of employees of the departments whose rank is between 10 and 15?

Actual SQL query: SELECT avg(num_employees) FROM department WHERE ranking BETWEEN 10 AND 15

Generated SQL query: SELECT AVG(Num_Employees) AS average_num_employees

FROM department

WHERE Ranking BETWEEN 10 AND 15;

Query similarity: 84.88%

Results match: True

Generated result: [(105468.16666666667,)]

Actual result: [(105468.16666666667,)]

Question 6: What are the names of the heads who are born outside the California state?

Actual SQL query: SELECT name FROM head WHERE born_state != 'California'

Generated SQL query: SELECT head.name

FROM head

WHERE head.born_state <> 'California';

Query similarity: 87.39%

Results match: True

Generated result: [('Tiger Woods',), ('K. J. Choi',), ('Jeff Maggert',), ('Stewart Cink',), ('Pádraig Harrington',), ('Franklin Langham',)]

Actual result: [('Tiger Woods',), ('K. J. Choi',), ('Jeff Maggert',), ('Stewart Cink',), ('Pádraig Harrington',), ('Franklin Langham',)]

Question 7: What are the distinct creation years of the departments managed by a secretary born in state 'Alabama'?

Actual SQL query: SELECT DISTINCT T1.creation FROM department AS T1 JOIN management AS T2 ON T1.department_id = T2.department_id JOIN head AS T3 ON T2.head_id = T3.head_id WHERE T3.born_state = 'Alabama'

Generated SQL query: SELECT DISTINCT Creation

FROM department

JOIN management ON department.Department_ID = management.department_ID

JOIN head ON management.head_ID = head.head_ID

WHERE head.born_state = 'Alabama';

Query similarity: 83.07%

Results match: True

Generated result: [('1903',)]

Actual result: [('1903',)]

Question 8: What are the names of the states where at least 3 heads were born?

Actual SQL query: SELECT born_state FROM head GROUP BY born_state HAVING count(*) >= 3

Generated SQL query: SELECT born_state

FROM head

GROUP BY born_state

HAVING COUNT(head_ID) >= 3;

Query similarity: 93.71%

Results match: True

Generated result: [('California',)]

Actual result: [('California',)]

Question 9: In which year were most departments established?

Actual SQL query: SELECT creation FROM department GROUP BY creation ORDER BY count(*) DESC LIMIT 1

Generated SQL query: SELECT strftime('%Y', Creation) AS establishment_year, COUNT(*) AS department_count

FROM department

GROUP BY establishment_year

ORDER BY department_count DESC

LIMIT 1;

Query similarity: 57.49%

Results match: False

Generated result: [('-4707', 12)]

Actual result: [('1789',)]

Question 10: Show the name and number of employees for the departments managed by heads whose temporary acting value is 'Yes'?

Actual SQL query: SELECT T1.name , T1.num_employees FROM department AS T1 JOIN management AS T2 ON T1.department_id = T2.department_id WHERE T2.temporary_acting = 'Yes'

Generated SQL query: SELECT d.Name, d.Num_Employees

FROM department d

JOIN management m ON d.Department_ID = m.department_ID

WHERE m.temporary_acting = 'Yes';

Query similarity: 89.90%

Results match: True

Generated result: [('Treasury', 115897.0), ('Homeland Security', 208000.0), ('Treasury', 115897.0)]

Actual result: [('Treasury', 115897.0), ('Homeland Security', 208000.0), ('Treasury', 115897.0)]

Overall Results:

Total Queries: 10

Correct Queries: 8

Accuracy: 80.00%

Average Query Similarity: 81.73%

Error Types:

Incorrect Result: 2 (20.00%)

Best Performing Query:

Question: How many heads of the departments are older than 56 ?

Generated Query: SELECT COUNT(*)

FROM head

WHERE age > 56;

Actual Query: SELECT count(*) FROM head WHERE age > 56

Similarity: 98.77%

Worst Performing Query:

Question: List the name, born state and age of the heads of departments ordered by age.

Generated Query: SELECT head.name, head.born_state, head.age

FROM head

JOIN management ON head.head_ID = management.head_ID

JOIN department ON management.department_ID = department.Department_ID

ORDER BY head.age;

Actual Query: SELECT name , born_state , age FROM head ORDER BY age

Similarity: 40.96%As you can see, Open AI performance was better in query results. However, this is by no means indicative of a particular LLM’s capability.

For that, we need to conduct some more elaborate tests and perform benchmarking — And this exercise is not mean to compare LLMs.

So what insights can we derive from these results?

- Query accuracy is worst when the queries are too complex

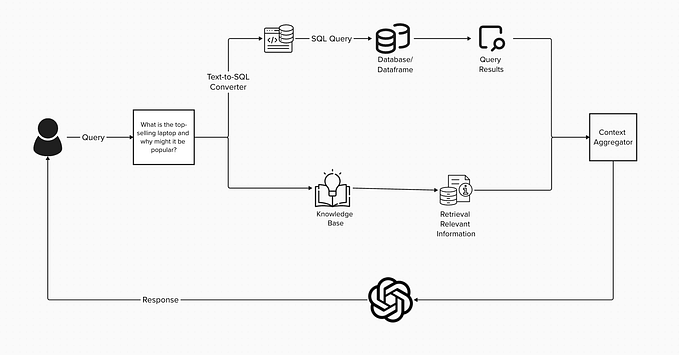

- Without contextualization (RAG) query performance isn’t great

- Relying directly on LLMs to create SQL queries isn’t 100% reliable, not even if I were to follow the Chase SQL recommended workflow

So how do we improve query reliability, accuracy and performance?

Ways to improve LLM query accuracy

- Create a Vector database of mapping natural language queries against actual queries run against your actual databases on actual production systems.

- Follow elaborate agentic workflows using Chain of Thought Prompting to improve query performance.

- It helps to give LLMs access to Data Catalogs or dictionaries so that they can make better sense of the domain.

- Fine Tune an LLM on your Query database and use that LLM with a RAG architecture and Agentic workflows with COT prompts (Best approach)

- Fine tune LLMs using RLHF (Reinforcement learning from Human feedback)

These are some reliable approaches to improve LLM query performance.

Don'ts

- Absolutely do not rely on LLMs to run mutation queries on the database unless there is a time travel feature (like in snowflake) or some rollback mechanism

- Do not use LLM based queries to query data with overly complex relationships — LLMs find it easier to generate simple select statements with Counts, SUMS, etc. especially on single tables.

- Do not make promises of 100% query accuracy. That just cannot be guaranteed.

- Do not think that an LLM can solve your Data Engineering problems. It can be a tool to assist you but never to replace entire pipelines.

- Never point your LLM to Sensitive, Confidential, Private or Proprietary data. Even if you have to, make sure the LLM is completely local and you have enough security safeguards and guardrails in place to prevent data leaks.

Do’s

- Always add logs and metrics to check LLM query accuracy and performance.

- Use LLMs to add create simple queries for non critical systems.

- If you really feel like automating Select queries using LLMs, I instead recommend pointing the LLM to a Graph QL or ORM interface.

- Always give context of your own actual queries to the LLM so it can understand the domain and nature of existing queries.

Where LLM’s do well

- Generating DDLs

- Generating a Data Catalogue and Data dictionary

- Single table simple select statements

- Summarizing results from SQL query results

- Benchmarking and performance testing of databases

Summary

This article isn’t meant to be a scathing indictment of LLM based SQL query generation.

On the contrary, I wanted to present a more balanced or nuanced perspective, perhaps pointing out limitations or challenges without completely discrediting the approach.

LLMs are great at putting 2 and 2 together in most cases and there is no harm in automating some parts of your pipelines (especially the non critical ones). In fact, you can even generate nice looking visualizations from query results which would be useful to create insights on perhaps — Log Tables, Audit Tables, etc.

There are a lot of options available in the market; With LLM offerings from OpenAI, Meta, Anthropic & Google, it is hard to make a choice — and this is why evaluation becomes important.

By evaluating each LLM’s performance on your specific workloads — such as generating SQL, summarizing data, or interpreting domain-specific queries — you can identify the best fit for your needs. In some cases, a hybrid approach, where specific query types are routed to the most suitable LLM, can deliver optimal accuracy and cost-efficiency.

Follow me Ritesh Shergill

for more articles on

🤖AI/ML

👨💻 Tech

👩🎓 Career advice

📲 User Experience

🏆 Leadership

You can follow me on LinkedIn where I post thought pieces and technical insights regularly.

I also do

✅ Career Guidance counselling — https://topmate.io/ritesh_shergill/149890

✅ Mentor Startups as a Fractional CTO — https://topmate.io/ritesh_shergill/193786